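SLUB 03: partial and cpu partial 2017-01-14

This post covers how partial and cpu partial slabs are produced.

The kernel is built without CONFIG_NUMA.

Where "partial" is not qualified as node partial or cpu partial, it means node partial.

1. How a node partial is produced

new_slab_objects -> new_slab starts on cpu0. Because new_slab may sleep, the task may be running on cpu1 afterwards. If cpu1's c->page is non-NULL at that point, that page may, depending on its state, be put on the node partial list.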

linux-3.10.86/mm/slub.c

__slab_alloc

|--local_irq_save(flags);

|--freelist = new_slab_objects //first object of a page fresh from the buddy allocator

| |--page = new_slab(s, flags, node); //on cpu0

| |--c = __this_cpu_ptr(s->cpu_slab) //the task may have migrated to cpu1

| |--if (c->page) flush_slab(s, c);

flush_slab(struct kmem_cache *s, struct kmem_cache_cpu *c)

|-- deactivate_slab(s, c->page, c->freelist)

static void deactivate_slab(struct kmem_cache *s, struct page *page, void *freelist)

{

/*

Before the while loop:

obj_t2 -> obj_t1 -> NULL

^

@page->freelist

obj_03 -> obj_02 -> obj_01 -> NULL

^

@freelist

After one iteration:

obj_03 -> obj_t2 -> obj_t1 -> NULL

^

page->freelist

obj_02 -> obj_01 -> NULL

^

freelist

After one more iteration:

obj_02 -> obj_03 -> obj_t2 -> obj_t1 -> NULL

^

page->freelist

obj_01 -> NULL

^

freelist

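Q: Why not splice the two lists together with a single pointer update, instead of moving the objects onto the list one at a time?

A: Because counters has to be updated as well, so the objects must be counted one by one.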

*/

while (freelist && (nextfree = get_freepointer(s, freelist))) {

...

}

....

/*

If freelist is NULL here, @freelist was already NULL when it was passed in.

*/

/* Link in the last object as well */

if (freelist) {

new.inuse--;

/*

new->freelist

v

obj_01 -> obj_02 -> obj_03 -> obj_t2 -> obj_t1 -> NULL

^

freelist

*/

set_freepointer(s, freelist, old.freelist);

new.freelist = freelist;

} else

...

new.frozen = 0;

//There are several cases (M_FREE, M_PARTIAL, ...); only M_PARTIAL is considered here

...

if (m == M_PARTIAL) {

/* Q: why not put_cpu_partial()? */

add_partial(n, page, tail);

}

}
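The list diagrams above can be replayed in user space. The following is a minimal sketch, not the kernel code: `struct obj`, `struct toy_page` and `toy_deactivate` are hypothetical names, the free pointer is assumed to sit at offset 0, and the cmpxchg that the kernel uses (and its separate handling of the last object) is left out. It only shows why walking the list object by object lets `inuse` be corrected along the way.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model: a free object stores its next-free pointer at offset 0. */
struct obj {
    struct obj *next;
};

/* Toy stand-in for the two struct page fields that matter here. */
struct toy_page {
    struct obj *freelist;   /* free objects already held by the page */
    unsigned int inuse;     /* objects currently counted as allocated */
};

/*
 * Move every object from the per-cpu freelist onto the page freelist,
 * one at a time, decrementing inuse for each object moved.
 */
static void toy_deactivate(struct toy_page *page, struct obj *cpu_freelist)
{
    while (cpu_freelist) {
        struct obj *next = cpu_freelist->next;

        assert(page->inuse > 0);
        cpu_freelist->next = page->freelist;   /* push onto the page list */
        page->freelist = cpu_freelist;
        page->inuse--;                         /* this object is free again */
        cpu_freelist = next;
    }
}
```

Starting from a page list obj_t2 -> obj_t1 and a cpu list obj_03 -> obj_02 -> obj_01, a call to `toy_deactivate` leaves obj_01 -> obj_02 -> obj_03 -> obj_t2 -> obj_t1 on the page, with `inuse` reduced by three.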

SLUB 04: tid 2017-01-14

1. tid

linux-3.10.86/include/linux/slub_def.h

struct kmem_cache_cpu {

...

unsigned long tid; /* Globally unique transaction id */

..

};

linux-3.10.86/mm/slub.c

#ifdef CONFIG_PREEMPT

/*

* Calculate the next globally unique transaction for disambiguiation

* during cmpxchg. The transactions start with the cpu number and are then

* incremented by CONFIG_NR_CPUS.

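Q: Why is each increment CONFIG_NR_CPUS rather than 1?

A: The design ensures that, at any moment, every cpu's tid differs from every other cpu's.

The comment above is somewhat outdated, though: the actual increment is TID_STEP, not CONFIG_NR_CPUS.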

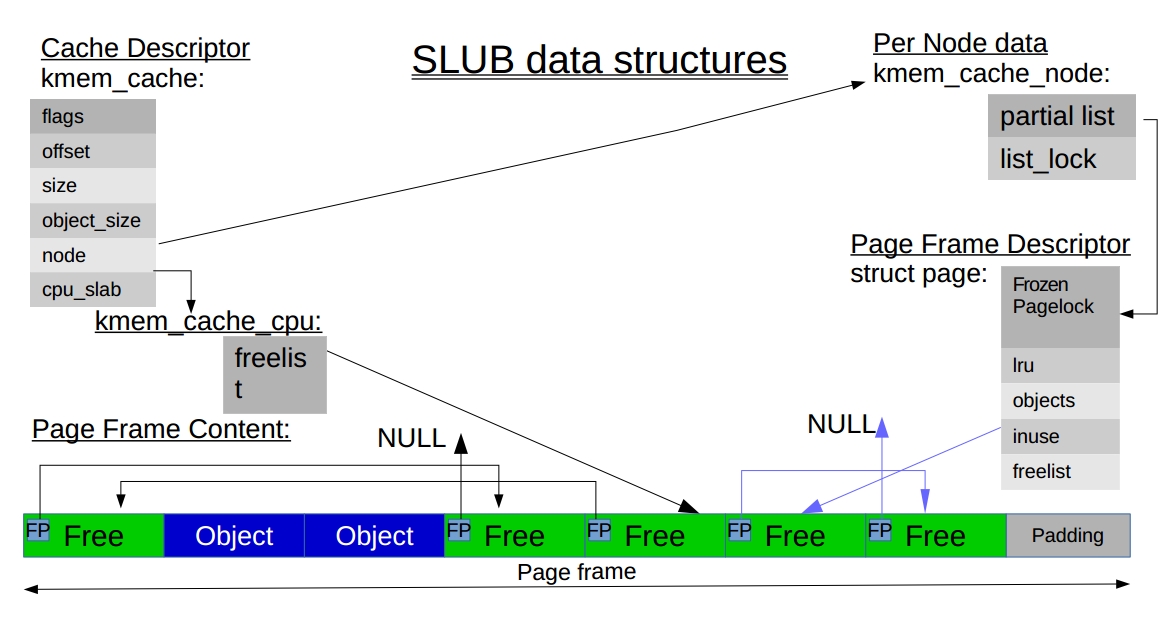
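The uniqueness argument can be checked with a small user-space sketch. It is modeled on the tid helpers in mm/slub.c, where TID_STEP is CONFIG_NR_CPUS rounded up to a power of two when CONFIG_PREEMPT is set. The `toy_` names and the value 4 are assumptions chosen for illustration.

```c
#include <assert.h>

/* Assumed for illustration: 4 cpus, so the step is already a power of two. */
#define TOY_TID_STEP 4UL

/* Each cpu starts from its own cpu number. */
static unsigned long toy_init_tid(unsigned int cpu)
{
    assert(cpu < TOY_TID_STEP);
    return cpu;
}

/* Every transaction advances the tid by the step, never by 1. */
static unsigned long toy_next_tid(unsigned long tid)
{
    return tid + TOY_TID_STEP;
}

/* The low part of a tid always identifies the owning cpu. */
static unsigned int toy_tid_to_cpu(unsigned long tid)
{
    return (unsigned int)(tid % TOY_TID_STEP);
}

/* The high part counts how many transactions that cpu has done. */
static unsigned long toy_tid_to_event(unsigned long tid)
{
    return tid / TOY_TID_STEP;
}
```

Because a tid always stays congruent to its cpu number modulo the step, two cpus can never hold the same tid, no matter how many transactions each has performed. With a step of 1, cpu0 after one transaction would collide with cpu1's initial value.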

SLUB 01: data structures and main flow of the SLUB allocator 2017-01-12

This post is from my old blog: blog.163.com/awaken_ing/blog/static/1206131972016316114114855

Platform: ARM Versatile Express for Cortex-A9 SMP

Kernel version: 3.10.86 (CONFIG_NUMA not defined)

1. Overview

Compared with SLAB, the SLUB allocator tries to remove the metadata overhead inside slabs, reduce the number of caches, and so on. The only metadata present in the SLUB allocator is the in-object “next-free-object” pointer, which allows us to link free objects together. The int offset member of struct kmem_cache gives the offset of this pointer within an object, and the pointer points to the next free object. How does the allocator manage to find the first free object? The answer lies in the approach of saving a pointer to such an object inside each page struct associated with the slab page. Unlike SLAB, the SLUB allocator has no full list and no empty list.

Figure from http://events.linuxfoundation.org/sites/events/files/slides/slaballocators.pdf

linux-3.10.86/mm/slub.c

slab_alloc() -> slab_alloc_node()

slab_alloc_node(struct kmem_cache *s, ...

{

struct kmem_cache_cpu *c=__this_cpu_ptr(s->cpu_slab);

object = c->freelist;

if fastpath {

void *next_object = get_freepointer_safe(s, object);

c->freelist=next_object; //via this_cpu_cmpxchg_double()

}else

...

return object;

}

static inline void *get_freepointer(struct kmem_cache *s, void *object)

{

return *(void **)(object + s->offset);

}
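To make the in-object pointer concrete, here is a minimal user-space sketch of a slab whose free objects are chained through a pointer stored at `offset`. `struct toy_cache`, `toy_init_slab` and `toy_alloc` are hypothetical names. The per-cpu structure, the tid and this_cpu_cmpxchg_double() are all omitted, so this shows only the shape of the fast path.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical miniature of struct kmem_cache: only the fields used here. */
struct toy_cache {
    size_t size;     /* bytes per object */
    size_t offset;   /* where the next-free pointer lives inside an object */
};

static void *toy_get_freepointer(const struct toy_cache *s, void *object)
{
    return *(void **)((char *)object + s->offset);
}

static void toy_set_freepointer(const struct toy_cache *s, void *object, void *fp)
{
    *(void **)((char *)object + s->offset) = fp;
}

/* Chain all nr objects of a slab together; returns the first free object. */
static void *toy_init_slab(const struct toy_cache *s, void *slab, size_t nr)
{
    char *p = slab;

    assert(nr > 0 && s->offset + sizeof(void *) <= s->size);
    for (size_t i = 0; i < nr; i++) {
        void *next = (i + 1 < nr) ? p + (i + 1) * s->size : NULL;

        toy_set_freepointer(s, p + i * s->size, next);
    }
    return slab;
}

/* Fast-path shape: hand out the head and advance freelist to its successor. */
static void *toy_alloc(const struct toy_cache *s, void **freelist)
{
    void *object = *freelist;

    if (!object)
        return NULL;   /* the kernel would fall back to the slow path here */
    *freelist = toy_get_freepointer(s, object);
    return object;
}
```

No list node is allocated anywhere: the free objects themselves carry the links, which is why the only per-slab bookkeeping needed is a pointer to the first free object.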

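The scheduler, starting from the lost wake-up problem 2017-01-11

This post is from my old blog: blog.163.com/awaken_ing/blog/static/1206131972016124113539444/

0. Introduction

For the lost wake-up problem, see http://www.linuxjournal.com/article/8144

This post explains why the fixed code has no such problem.

The fixed code is: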

set_current_state(TASK_INTERRUPTIBLE);

spin_lock(&list_lock);

if (list_empty(&list_head)) {

    spin_unlock(&list_lock); //what if the cpu is given up inside this call?

    //what if the producer wakes us up at this point?

    schedule();

    spin_lock(&list_lock);

}

set_current_state(TASK_RUNNING);

/* Rest of the code ... */

spin_unlock(&list_lock);
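The question about a wake-up arriving between spin_unlock() and schedule() can be explored with a single-threaded model. This is only a sketch: `struct toy_task` and the `toy_` functions are hypothetical. They reduce the scheduler to the two facts that matter here: a wake-up sets the task state back to running, and schedule() takes a task off the run queue only if its state is not running.

```c
#include <assert.h>
#include <stdbool.h>

enum toy_state { TOY_RUNNING, TOY_INTERRUPTIBLE };

struct toy_task {
    enum toy_state state;
    bool on_runqueue;
};

/* Models set_current_state(). */
static void toy_set_state(struct toy_task *t, enum toy_state s)
{
    t->state = s;
}

/* Models the producer's wake-up: mark the task runnable and queue it. */
static void toy_wake_up(struct toy_task *t)
{
    t->state = TOY_RUNNING;
    t->on_runqueue = true;
}

/*
 * Models the relevant part of schedule(): the caller is dequeued only
 * if its state is not running. Returns true if the task went to sleep.
 */
static bool toy_schedule(struct toy_task *t)
{
    assert(t->on_runqueue);
    if (t->state != TOY_RUNNING) {
        t->on_runqueue = false;
        return true;
    }
    return false;
}
```

In this model, calling `toy_set_state(&t, TOY_INTERRUPTIBLE)`, then `toy_wake_up(&t)`, then `toy_schedule(&t)` leaves the task on the run queue, so the wake-up is not lost. Setting the state before the emptiness check is what makes this ordering safe; if the state were set after the check, a wake-up arriving in between would be overwritten.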

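Only TIF_NEED_RESCHED is checked, not preempt_count? 2017-01-11

1. When TIF_NEED_RESCHED is set

Task task01 has not finished, but the scheduler decides it has run long enough and sets TIF_NEED_RESCHED. At the next suitable point, task01 is scheduled out and other tasks get the cpu.

Besides scheduler_tick, try_to_wake_up() also sets this flag when a higher-priority task becomes runnable.

2. preempt_count is not checked?

Example: linux-3.10.86/arch/arm/kernel/entry-armv.S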

__vector_irq()

|--__irq_usr() @entry-armv.S

| |--usr_entry()

| |--irq_handler()

| |--ret_to_user_from_irq() @entry-common.S

| | |--work_pending()

| | | |--do_work_pending @signal.c

do_work_pending

{

do {

if (likely(thread_flags & _TIF_NEED_RESCHED))

schedule();

else

...

}

}