SLUB 01: Data structures and main flow of the SLUB allocator

This post comes from my old blog: blog.163.com/awaken_ing/blog/static/1206131972016316114114855

Platform: ARM Versatile Express for Cortex-A9 SMP

Kernel version: 3.10.86 (CONFIG_NUMA not defined)

1. Overview

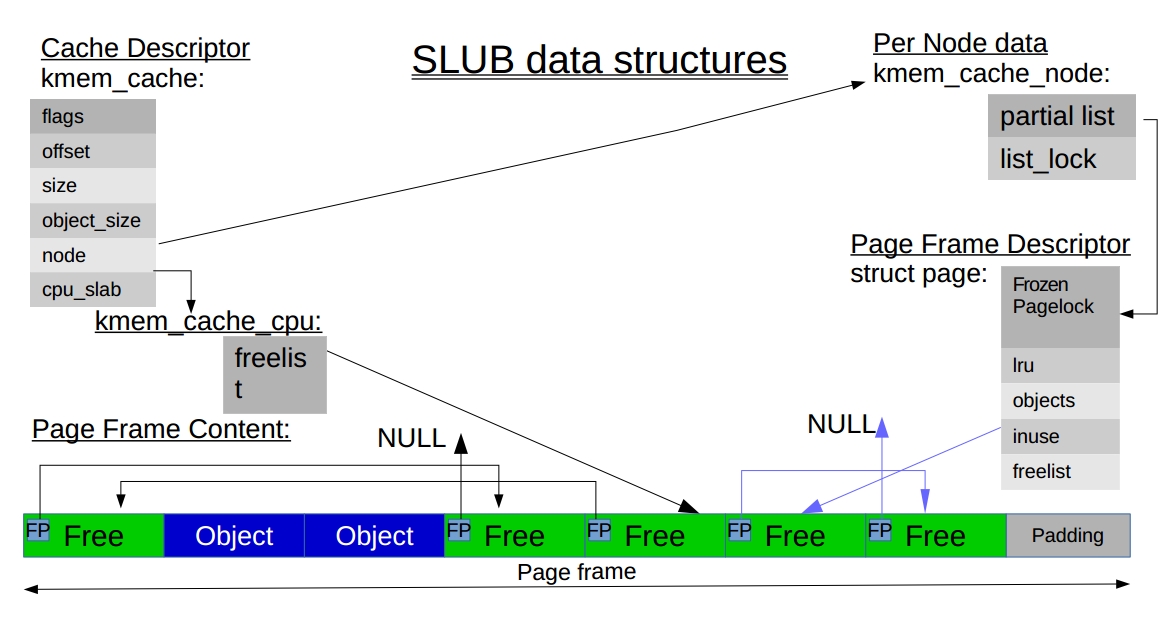

Compared with SLAB, the SLUB allocator tries to remove the metadata overhead inside slabs, reduce the number of caches, and so on. The only metadata present inside a slab is the in-object "next-free-object" pointer, which links free objects together. The int offset member of struct kmem_cache gives the offset of this pointer within an object; the pointer points to the next available object. How does the allocator find the first free object? A pointer to that object is saved in the struct page associated with the slab page. SLUB has no counterpart to SLAB's full list and empty list.

Image source: http://events.linuxfoundation.org/sites/events/files/slides/slaballocators.pdf

linux-3.10.86/mm/slub.c

slab_alloc() -> slab_alloc_node()

    slab_alloc_node(struct kmem_cache *s, ...)
    {
        struct kmem_cache_cpu *c = __this_cpu_ptr(s->cpu_slab);

        object = c->freelist;
        if (fastpath) {                 /* c->freelist still has an object */
            void *next_object = get_freepointer_safe(s, object);

            c->freelist = next_object;  /* via this_cpu_cmpxchg_double() */
        } else {
            ...
        }
        return object;
    }

    static inline void *get_freepointer(struct kmem_cache *s, void *object)
    {
        return *(void **)(object + s->offset);
    }
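The in-object free pointer can be tried out in user space. The following is a minimal sketch, not kernel code: the `toy_*` names are made up for illustration, the slab is a plain buffer, and there is no cmpxchg, so it only works single-threaded. It shows how objects are threaded into a list through a pointer stored at `offset`, and how the fastpath pops the head.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-ins for struct kmem_cache / struct kmem_cache_cpu;
 * only the fields needed for the in-object free pointer are kept. */
struct toy_cache {
    size_t size;    /* object size in bytes */
    size_t offset;  /* where the next-free pointer lives inside an object */
};

struct toy_cpu_slab {
    void *freelist; /* first free object, NULL when exhausted */
};

/* Same idea as get_freepointer(): read the pointer stored in the object.
 * memcpy is used so that unaligned buffers are safe in this sketch. */
static void *toy_get_freepointer(const struct toy_cache *s, void *object)
{
    void *next;

    memcpy(&next, (char *)object + s->offset, sizeof(next));
    return next;
}

static void toy_set_freepointer(const struct toy_cache *s, void *object,
                                void *next)
{
    memcpy((char *)object + s->offset, &next, sizeof(next));
}

/* Carve a buffer into objects and link them into one free list. */
static void toy_init_slab(const struct toy_cache *s, struct toy_cpu_slab *c,
                          void *buf, size_t nr_objects)
{
    char *p = buf;

    assert(s->offset + sizeof(void *) <= s->size);
    for (size_t i = 0; i < nr_objects; i++) {
        void *next = (i + 1 < nr_objects) ? p + (i + 1) * s->size : NULL;

        toy_set_freepointer(s, p + i * s->size, next);
    }
    c->freelist = nr_objects ? buf : NULL;
}

/* Fastpath only: pop the head of the list. Returns NULL at the point
 * where the kernel would enter the slowpath. */
static void *toy_alloc(const struct toy_cache *s, struct toy_cpu_slab *c)
{
    void *object = c->freelist;

    if (!object)
        return NULL;
    c->freelist = toy_get_freepointer(s, object);
    return object;
}

/* Freeing pushes the object back onto the head of the list. */
static void toy_free(const struct toy_cache *s, struct toy_cpu_slab *c,
                     void *object)
{
    toy_set_freepointer(s, object, c->freelist);
    c->freelist = object;
}
```

With a 128-byte buffer and 32-byte objects, four allocations succeed and the fifth returns NULL; an object that was just freed is the next one handed out, because free pushes it onto the head of the list.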

2. Data structures and flow, seen from the slowpath

The slowpath of slab_alloc_node() is __slab_alloc().

    struct kmem_cache {
        struct kmem_cache_cpu __percpu *cpu_slab;
        ...
        struct list_head list;  /* List of slab caches */
        ...
        struct kmem_cache_node *node[MAX_NUMNODES];
    };

    struct kmem_cache_cpu {
        void **freelist;        /* Pointer to next available object */
        ...
        struct page *page;      /* The slab from which we are allocating */
        struct page *partial;   /* Partially allocated frozen slabs */
        ...
    };

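The slowpath __slab_alloc() is entered because the per-CPU freelist of the kmem_cache, a singly linked list of objects, has been used up (kmem_cache->cpu_slab->freelist == NULL).

__slab_alloc() first falls back to the freelist of the per-CPU page: cpu_slab->freelist = cpu_slab->page->freelist; cpu_slab->page->freelist = NULL;

If that is used up as well, it tries the objects of the pages on cpu_slab->partial and the list hanging off it.

If there is still no object, that is, all per-CPU objects are exhausted, __slab_alloc() calls new_slab_objects():

It uses the pages reached through kmem_cache_node->partial.next (which points to the lru member of a struct page). There are nr_partial such pages in total, and pages whose freelist is not empty are taken. This is a batch operation: the first page taken is assigned to c->page, and the other pages are put on the per-CPU partial list.

If that yields nothing either, new_slab() is called, as follows: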

    new_slab_objects
    |--get_partial
    |  |--// search the doubly linked partial list of struct kmem_cache_node; pages found there go to c->page and to the page list of struct kmem_cache_cpu (linked through struct page's next)
    |  |--get_partial_node
    |--fallback to new_slab()
    |  |--allocate_slab
    |  |  |--alloc_slab_page(..., oo of struct kmem_cache): allocate from the buddy system, with the order taken from oo
    |  |  |--fallback to alloc_slab_page(..., min of struct kmem_cache): the order is taken from min
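The fallback order described above can be summarized with a toy model. This is only a sketch under strong simplifications: every tier is reduced to a count of free objects, tapping a tier moves all of its objects to the per-CPU freelist at once, and all `toy_*` / `FROM_*` names are invented for illustration. The real code moves whole pages and uses cmpxchg and list locks.

```c
#include <assert.h>

/* Where an allocation was satisfied from (names made up for this sketch). */
enum toy_source {
    FROM_CPU_FREELIST,  /* fastpath: c->freelist */
    FROM_CPU_PAGE,      /* c->page->freelist */
    FROM_CPU_PARTIAL,   /* pages on c->partial */
    FROM_NODE_PARTIAL,  /* pages on kmem_cache_node->partial */
    FROM_NEW_SLAB       /* new_slab(), i.e. the buddy system */
};

/* Each tier is reduced to a number of free objects. */
struct toy_state {
    int cpu_freelist;
    int cpu_page;
    int cpu_partial;
    int node_partial;
    int objs_per_slab;  /* objects in a freshly allocated slab */
};

/* Move every object of *pool to the per-CPU freelist and hand one out.
 * Returns 0 if the pool was empty. */
static int toy_refill(struct toy_state *st, int *pool)
{
    if (*pool <= 0)
        return 0;
    st->cpu_freelist = *pool - 1;
    *pool = 0;
    return 1;
}

static enum toy_source toy_alloc(struct toy_state *st)
{
    /* Fastpath. */
    if (st->cpu_freelist > 0) {
        st->cpu_freelist--;
        return FROM_CPU_FREELIST;
    }

    /* Slowpath: try each tier in the order the text describes. */
    if (toy_refill(st, &st->cpu_page))
        return FROM_CPU_PAGE;
    if (toy_refill(st, &st->cpu_partial))
        return FROM_CPU_PARTIAL;
    if (toy_refill(st, &st->node_partial))
        return FROM_NODE_PARTIAL;

    /* Last resort: a brand-new slab. */
    assert(st->objs_per_slab > 0);
    st->cpu_freelist = st->objs_per_slab - 1;
    return FROM_NEW_SLAB;
}
```

Starting from a state with one object on the per-CPU freelist, two on the per-CPU page, none on the per-CPU partial list and three on the node partial list, successive calls report the per-CPU freelist, then the per-CPU page, and only later the node partial list and finally a new slab.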

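About struct kmem_cache_order_objects:

    allocate_slab
    |--alloc_pages(..., oo_order(oo))
    |--page->objects = oo_objects(oo);

Member x stores the order in the bits above the low 16 (the high 16 bits on this 32-bit platform); the low 16 bits hold the object count that ends up in struct page's unsigned objects field.

    struct kmem_cache_order_objects { unsigned long x; };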
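The packing can be sketched in user space as follows. This assumes a shift of 16, matching the description above, and 4 KiB pages; the `toy_*` names are illustrative, and the helper ignores the reserved bytes that the kernel subtracts from the slab size before dividing.

```c
#include <assert.h>

#define TOY_OO_SHIFT  16
#define TOY_OO_MASK   ((1UL << TOY_OO_SHIFT) - 1)
#define TOY_PAGE_SIZE 4096UL    /* assumption: 4 KiB pages */

struct toy_order_objects {
    unsigned long x;
};

/* Pack the page order and the number of objects that fit in a slab of
 * that order into a single word. */
static struct toy_order_objects toy_oo_make(unsigned int order,
                                            unsigned long size)
{
    struct toy_order_objects oo;
    unsigned long objects;

    assert(size > 0);
    objects = (TOY_PAGE_SIZE << order) / size;
    assert(objects <= TOY_OO_MASK);     /* must fit in the low 16 bits */

    oo.x = ((unsigned long)order << TOY_OO_SHIFT) + objects;
    return oo;
}

/* Order: everything above the low 16 bits. */
static unsigned int toy_oo_order(struct toy_order_objects oo)
{
    return (unsigned int)(oo.x >> TOY_OO_SHIFT);
}

/* Object count: the low 16 bits. */
static unsigned int toy_oo_objects(struct toy_order_objects oo)
{
    return (unsigned int)(oo.x & TOY_OO_MASK);
}
```

For example, an order-1 slab (8192 bytes) of 256-byte objects holds 32 objects, so x becomes 0x10020.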

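3. Q&A

Q: In some cases one slab spans multiple pages. What is different then?

A: new_slab() allocates a compound page from the buddy system, so the slab has every property a compound page has; for the details see linux-3.10.86/mm/page_alloc.c:prep_new_page().

Within the compound page only the head page has PG_slab set; the tail pages do not.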

    new_slab
    {
        __SetPageSlab(page)
    }

4. References

https://lwn.net/Articles/229984/

http://events.linuxfoundation.org/sites/events/files/slides/slaballocators.pdf

5. Appendix: struct page as used with SLUB

    struct page {
        /* First double word block */
        unsigned long flags;
        struct address_space *mapping;

        /* Second double word */
        struct {
            union {
                pgoff_t index;      /* Our offset within mapping. */
                void *freelist;     /* slub/slob first free object */
                bool pfmemalloc;
            };

            union {
                unsigned counters;

                struct {
                    union {
                        atomic_t _mapcount;

                        struct { /* SLUB */
                            unsigned inuse:16;
                            unsigned objects:15;
                            unsigned frozen:1;
                        };
                        int units;  /* SLOB */
                    };
                    atomic_t _count;    /* Usage count, see below. */
                };
            };
        }; /* end of Second double word */

        /* Third double word block */
        union {
            struct list_head lru;
            struct { /* slub per cpu partial pages */
                struct page *next;  /* Next partial slab */
                short int pages;
                short int pobjects;
            };

            struct list_head list;  /* slobs list of pages */
            struct slab *slab_page; /* slab fields */
        }; /* end of Third double word block */

        /* Remainder is not double word aligned */
        union {
            unsigned long private;
            spinlock_t ptl;
            struct kmem_cache *slab_cache;  /* SL[AU]B: Pointer to slab */
            struct page *first_page;        /* Compound tail pages */
        };
        ...
    };
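In this struct, counters overlays inuse, objects and frozen, so all three can be read or replaced as one word. The sketch below shows the idea in user space. It is loosely modeled on what the allocator does when it takes over a page's whole freelist (mark every object as in use and freeze the page); `toy_counters` and `toy_take_all` are made-up names, the cmpxchg that the kernel uses to publish the new word is left out, and the exact bit layout of the fields is compiler-dependent.

```c
#include <assert.h>

/* User-space copy of the SLUB part of the "second double word". */
union toy_counters {
    unsigned counters;          /* all three fields viewed as one word */
    struct {
        unsigned inuse:16;      /* objects currently handed out */
        unsigned objects:15;    /* total objects in the slab */
        unsigned frozen:1;      /* slab is owned by one CPU */
    };
};

/* Compute the new counters word for "take every free object and freeze
 * the page". The kernel would install the result with a cmpxchg against
 * the old word; here it is simply returned. */
static unsigned toy_take_all(unsigned old_counters)
{
    union toy_counters n;

    n.counters = old_counters;
    assert(n.inuse <= n.objects);
    n.inuse = n.objects;        /* every object now counts as in use */
    n.frozen = 1;
    return n.counters;
}
```

Because the three fields travel together in counters, a single compare-and-exchange can detect that any of them changed concurrently.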


Post URL: https://awakening-fong.github.io/posts/mm/slub_slab_alloc

Please credit the source when reposting: https://awakening-fong.github.io
